Global Multimodal UI Market - Key Trends & Drivers Summarized

How Is Multimodal UI Enhancing Human-Computer Interaction?

Multimodal user interfaces (UIs) are transforming the way humans interact with technology by enabling seamless communication through multiple input methods, including voice, touch, gestures, and gaze tracking. Unlike traditional single-mode UIs, multimodal interfaces enhance accessibility, adaptability, and user engagement by allowing users to choose the most intuitive interaction method based on their context. The growing demand for hands-free and touchless interactions has fueled the adoption of multimodal UIs in smart devices, automotive infotainment systems, and industrial applications. In consumer electronics, smart assistants such as Alexa, Google Assistant, and Siri exemplify how voice recognition is being combined with touch and visual feedback to create more natural and responsive interactions. Meanwhile, in augmented reality (AR) and virtual reality (VR) environments, multimodal UI enhances user immersion by integrating voice commands, eye tracking, and motion gestures. As user expectations for seamless digital interactions increase, businesses are investing in sophisticated AI-driven multimodal systems to improve personalization and user satisfaction across various platforms.

What Role Do Emerging Technologies Play in Multimodal UI Development?

The rapid advancement of artificial intelligence (AI), machine learning (ML), and natural language processing (NLP) has significantly contributed to the evolution of multimodal UI, making interactions more intelligent and context-aware. AI-driven voice assistants are now capable of understanding nuanced speech patterns and adapting to individual user preferences, improving the accuracy and efficiency of voice commands. Gesture recognition technology, powered by deep learning and computer vision, is enabling touchless navigation in smart homes, automotive systems, and healthcare applications. Additionally, advancements in biometric authentication, including facial and iris recognition, are enhancing security in multimodal interfaces. The integration of edge AI has further optimized multimodal UI by enabling real-time processing of voice and gesture inputs on local devices, reducing latency and improving response times. With the advent of 5G networks, multimodal UI is expected to become even more responsive and reliable, allowing for seamless interactions across connected ecosystems. As technology continues to evolve, multimodal UIs will play a crucial role in shaping the future of human-computer interaction, offering more immersive and intuitive experiences across industries.

How Are Industry Trends and Consumer Preferences Shaping the Multimodal UI Market?

The shift towards more intuitive and personalized digital experiences has driven the widespread adoption of multimodal UI across various sectors, including healthcare, retail, automotive, and smart home automation. Consumers are increasingly demanding interfaces that offer frictionless interactions, leading to the integration of multimodal capabilities in wearable technology, gaming consoles, and virtual assistants. The automotive industry is a major driver of multimodal UI adoption, with voice-activated controls, gesture-based navigation, and haptic feedback being integrated into infotainment systems to enhance driver safety and convenience. In healthcare, multimodal interfaces are revolutionizing patient care by enabling touchless interactions, voice-guided medical procedures, and AI-powered diagnostics. The rise of omnichannel retailing has also influenced multimodal UI trends, with brands leveraging voice shopping, AR-enabled try-on experiences, and chatbot-driven customer support to enhance user engagement. As consumers seek more natural and efficient ways to interact with technology, businesses are prioritizing multimodal UI design to differentiate their products and deliver superior user experiences.

What Are the Key Growth Drivers Fueling the Multimodal UI Market?

The growth in the global multimodal UI market is driven by several factors, including the increasing adoption of AI-powered virtual assistants, the proliferation of smart devices, and advancements in speech and gesture recognition technologies. The demand for accessibility-friendly interfaces has accelerated the development of multimodal UI solutions that cater to diverse user needs, including individuals with disabilities. The rapid expansion of IoT ecosystems has also contributed to market growth, as connected devices rely on multimodal interactions for seamless communication and control. Additionally, the expansion of smart city initiatives and autonomous vehicle technologies has created new opportunities for multimodal UI applications in public infrastructure and mobility solutions. Businesses are investing heavily in R&D to develop context-aware UIs that leverage real-time data analytics and predictive modeling to enhance user engagement. Moreover, regulatory frameworks promoting inclusive and ergonomic interface design are encouraging companies to prioritize multimodal accessibility. As consumer expectations for intuitive and adaptive interfaces continue to rise, the multimodal UI market is set for significant expansion, driving innovation in human-computer interaction and redefining the future of digital experiences.

Report Scope

The report analyzes the Multimodal UI market, presented in terms of market value. The analysis covers the key segments and geographic regions outlined below.

- Segments: Component Type (Multimodal UI Hardware, Multimodal UI Software, Multimodal UI Services); Interaction Type (Speech Recognition, Gesture Recognition, Eye Tracking, Facial Expression Recognition, Haptics / Tactile Interaction, Visual Interaction, Other Interaction Types).

- Geographic Regions/Countries: World; United States; Canada; Japan; China; Europe (France; Germany; Italy; United Kingdom; and Rest of Europe); Asia-Pacific; Rest of World.

Key Insights:

- Market Growth: Understand the significant growth trajectory of the Multimodal UI Hardware segment, which is expected to reach US$42.2 Billion by 2030 at a CAGR of 19%. The Multimodal UI Software segment is also set to grow at a 17.1% CAGR over the analysis period.

- Regional Analysis: Gain insights into the U.S. market, valued at $6.4 Billion in 2024, and China, forecasted to grow at an impressive 17.1% CAGR to reach $10.3 Billion by 2030. Discover growth trends in other key regions, including Japan, Canada, Germany, and the Asia-Pacific.

Why You Should Buy This Report:

- Detailed Market Analysis: Access a thorough analysis of the Global Multimodal UI Market, covering all major geographic regions and market segments.

- Competitive Insights: Get an overview of the competitive landscape, including the market presence of major players across different geographies.

- Future Trends and Drivers: Understand the key trends and drivers shaping the future of the Global Multimodal UI Market.

- Actionable Insights: Benefit from actionable insights that can help you identify new revenue opportunities and make strategic business decisions.

Key Questions Answered:

- How is the Global Multimodal UI Market expected to evolve by 2030?

- What are the main drivers and restraints affecting the market?

- Which market segments will grow the most over the forecast period?

- How will market shares for different regions and segments change by 2030?

- Who are the leading players in the market, and what are their prospects?

Report Features:

- Comprehensive Market Data: Independent analysis of annual sales and market forecasts in US$ Million from 2024 to 2030.

- In-Depth Regional Analysis: Detailed insights into key markets, including the U.S., China, Japan, Canada, Europe, Asia-Pacific, Latin America, Middle East, and Africa.

- Company Profiles: Coverage of players such as ABB Ltd., Advantech Co., Ltd., AECOM, Alstom SA, Bombardier Transportation and more.

- Complimentary Updates: Receive free report updates for one year to keep you informed of the latest market developments.

Some of the 32 companies featured in this Multimodal UI market report include:

- Aimesoft

- Amazon

- Anthropic

- Appen Limited

- Apple Inc.

- Clay

- Frog Design

- GestureTek

- Google LLC

- Human Media Lab

- Hume AI

- IBM Corporation

- Jina AI

- Leap Motion (Ultraleap)

- LinkUp Studio

- Meta Platforms, Inc.

- Microsoft Corporation

- OpenAI

- Siemens AG

- uSens, Inc.

This edition integrates the latest global trade and economic shifts into comprehensive market analysis. Key updates include:

- Tariff and Trade Impact: Insights into global tariff negotiations across 180+ countries, with analysis of supply chain turbulence, sourcing disruptions, and geographic realignment. Special focus on 2025 as a pivotal year for trade tensions, including updated perspectives on the Trump-era tariffs.

- Adjusted Forecasts and Analytics: Revised global and regional market forecasts through 2030, incorporating tariff effects, economic uncertainty, and structural changes in globalization. Includes historical analysis from 2015 to 2023.

- Strategic Market Dynamics: Evaluation of revised market prospects, regional outlooks, and key economic indicators such as population and urbanization trends.

- Innovation & Technology Trends: Latest developments in product and process innovation, emerging technologies, and key industry drivers shaping the competitive landscape.

- Competitive Intelligence: Updated global market share estimates for 2025, competitive positioning of major players (Strong/Active/Niche/Trivial), and refined focus on leading global brands and core players.

- Expert Insight & Commentary: Strategic analysis from economists, trade experts, and domain specialists to contextualize market shifts and identify emerging opportunities.

Table Information

| Report Attribute | Details |

|---|---|

| No. of Pages | 140 |

| Published | February 2026 |

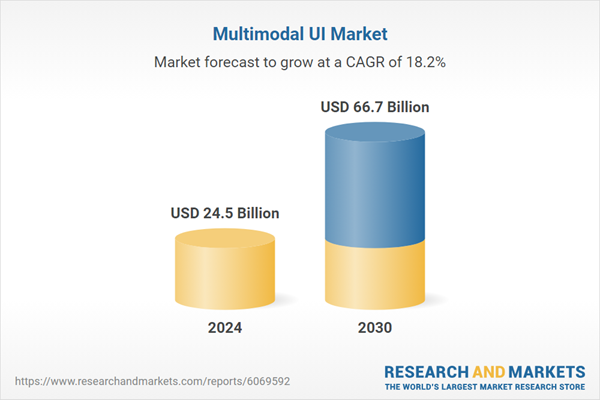

| Forecast Period | 2024 - 2030 |

| Estimated Market Value ( USD | $ 24.5 Billion |

| Forecasted Market Value ( USD | $ 66.7 Billion |

| Compound Annual Growth Rate | 18.2% |

| Regions Covered | Global |
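
The headline figures in the table can be cross-checked with the standard compound annual growth rate formula. The sketch below is illustrative only and is not taken from the report: it assumes the estimated value of $24.5 Billion applies to 2024 and the forecasted value of $66.7 Billion to 2030, giving six compounding periods. The function names `cagr` and `project` are hypothetical helpers introduced for this example.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.18 means 18%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` periods."""
    return start_value * (1 + rate) ** years


# Assumption: 2024 is the base year and 2030 the end year (6 periods).
START_BILLION = 24.5   # estimated market value from the table
END_BILLION = 66.7     # forecasted market value from the table
YEARS = 2030 - 2024

implied = cagr(START_BILLION, END_BILLION, YEARS)
projected = project(START_BILLION, 0.182, YEARS)

print(f"Implied CAGR: {implied:.1%}")
print(f"Value in 2030 at the stated 18.2% CAGR: ${projected:.1f} Billion")
```

Under these assumptions the implied rate rounds to the stated 18.2%, and compounding the stated rate lands within rounding distance of the forecasted value, so the three table figures appear mutually consistent.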