Concise contextual introduction that frames the urgency and strategic relevance of AI detector technologies across enterprise risk, compliance, and content integrity initiatives

This executive summary introduces the evolving discipline of AI detection and situates it within current enterprise priorities of content integrity, regulatory compliance, and operational resilience. Organizations across sectors increasingly treat AI detection not as a niche capability but as a core risk management function that interfaces with data governance, incident response, and trust frameworks. As synthetic media and automated content generation proliferate, detection solutions are being adopted to preserve brand reputation, enforce policy, and reduce exposure to fraud and manipulation.

Contextual clarity is essential: today’s detection landscape spans hardware components that support high-throughput inference, services that integrate detection into business workflows, and software tools that offer analytics, model management, and visualization capabilities. Moreover, technological diversity, from deep learning to more deterministic rule-based approaches, creates a spectrum of trade-offs between explainability, latency, and robustness. As a result, procurement teams must evaluate both technical fit and operational readiness. This introduction frames the subsequent sections by underscoring that effective adoption blends technological capability with governance, vendor strategy, and practical deployment choices, and that near-term decisions will shape long-term program durability and stakeholder trust.

Analytical overview of transformative shifts reshaping the AI detection landscape driven by model evolution, regulatory pressure, threat actors, and cross-industry adoption

The AI detection landscape is undergoing transformative shifts driven by advances in model architectures, adversarial dynamics, and increasingly prescriptive regulatory attention. On the technology front, transformer-based and multimodal models have boosted detection accuracy for complex synthetic content, while research into robustness and interpretability has accelerated demand for explainable outputs that security and compliance teams can act on. Concurrently, the adversarial ecosystem has matured: generative capabilities have become more accessible, and threat actors increasingly tailor content to evade automated screening, prompting defenders to invest in ensemble approaches that combine statistical, behavioral, and provenance signals.

Regulatory pressure and industry standards are another major inflection point. Policymakers are moving from high-level principles to concrete requirements for provenance, labeling, and auditability, which in turn incentivizes vendors to embed logging, chain-of-custody controls, and human-in-the-loop workflows. Operationally, enterprises are rethinking where detection sits: some centralize capabilities within security operations centers, while others distribute lightweight inference to edge and customer-facing systems. Finally, vendor ecosystems are consolidating in some segments even as niche specialists emerge to meet vertical use cases. Collectively, these forces are reshaping buyer expectations, contractual terms, and the architecture of detection platforms.

Assessment of cumulative implications of United States tariffs announced in 2025 on supply chains, technology sourcing strategies, and global deployment choices for AI detection tools

The policy environment and trade instruments introduced in the United States during 2025 created a new set of considerations for procurement, supply chain resilience, and vendor engagement in the AI detection ecosystem. Hardware-dependent components such as inference accelerators, specialized sensors, and integrated appliances faced increased cost and procurement complexity, which led many buyers to re-evaluate total cost of ownership and to accelerate migration to cloud-based or hybrid delivery models where feasible. Software-centric components and cloud-native services experienced relative insulation from direct tariff impacts, yet they were affected indirectly through licensing negotiations, local data processing requirements, and changes in vendor support footprints.

Practically speaking, organizations adapted in three principal ways. First, procurement teams diversified their supplier base and examined alternative manufacturing geographies to mitigate single-source exposure. Second, architecture decisions shifted in favor of modularity: enterprises prioritized solutions that decouple hardware acceleration from core detection algorithms, enabling easier substitution in response to tariff-driven supply disruptions. Third, procurement cycles lengthened as legal and compliance teams scrutinized contractual clauses related to import duties, maintenance, and indemnities. These cumulative impacts emphasize the importance of scenario planning and of integrating trade-policy sensitivity into vendor selection and deployment roadmaps.

Insightful segmentation analysis revealing how product types, technologies, ownership models, pricing, applications, industries, organization size and deployment choices influence adoption

A nuanced segmentation analysis reveals how adoption pathways and value realization vary across product, technology, ownership, pricing, application, industry, organization size, and deployment dimensions. Based on product type, buyers evaluate Hardware across controllers, modules, and sensors when low-latency or edge inference is required, while Services are selected for consulting, integration, and ongoing support to operationalize detection into business processes; Software choices emphasize analytics, management, and visualization for continuous monitoring and decisioning. Based on technology, deep learning approaches excel at complex pattern recognition in audio and video deepfake detection, machine learning offers balanced performance with manageable explainability trade-offs, and rule-based & linguistic systems provide precise, auditable outcomes for text-focused malicious content and plagiarism use cases.

Model ownership is a strategic axis: open source models can accelerate experimentation and transparency, whereas proprietary models often deliver turnkey performance and service-level assurances. Pricing models (freemium, subscription, and usage-based) shape adoption velocity and pilot-to-scale economics, influencing whether organizations commit to long-term engagements or prefer flexible, consumption-driven arrangements. Application segmentation clarifies technical requirements: deepfake detection requires multimodal signals with separate attention to audio and video pipelines, malicious content detection demands real-time throughput for fake news and hate speech filtering, and plagiarism detection emphasizes textual similarity and provenance.
End-user industry differences are material: banking and financial services prioritize fraud and regulatory controls, energy and utilities focus on infrastructure integrity, government and defense emphasize provenance and attribution, healthcare requires privacy-preserving detection, IT & telecom look for scalable inline solutions, manufacturing values integration with operational systems, and retail prioritizes brand protection and customer trust. Organization size influences governance and resource allocation, with large enterprises often adopting comprehensive, multi-vendor strategies while small and medium enterprises prefer simplified, managed offerings. Deployment type remains a decisive factor: cloud solutions accelerate time-to-value and scale, while on-premise deployments, whether appliance-based or server-based, are chosen for data residency, latency, or regulatory reasons. Integrating these segmentation lenses allows leaders to tailor roadmaps that balance capability, cost, and compliance.

Region-specific strategic intelligence highlighting market dynamics, adoption patterns, policy influences and ecosystem maturity across Americas, Europe Middle East & Africa and Asia-Pacific

Regional dynamics shape both strategic priorities and tactical implementation choices for AI detection solutions. In the Americas, a combination of market-driven innovation and mature cloud infrastructure has produced rapid adoption of cloud-native detection services, especially among technology-forward enterprises that prioritize scalability and integration with analytics platforms. Regulatory focus in certain jurisdictions has encouraged investments in provenance and audit capabilities, and cross-border data flows continue to influence deployment footprints. In contrast, Europe, Middle East & Africa exhibits heterogeneous demand driven by varying regulatory regimes and compliance priorities; European markets, in particular, emphasize privacy, data protection, and explainability, prompting more on-premise deployments or hybrid configurations to meet local sovereignty requirements. Meanwhile, Gulf and select African markets show growing interest in specialized solutions for media verification and government use cases.

Asia-Pacific represents a diverse and high-growth axis where adoption is often driven by large-scale platform operators and telecom providers seeking to embed detection capabilities at scale. The region features a mix of centralized cloud deployments and highly localized, on-premise systems where latency, language diversity, and local regulatory mandates matter. Across all regions, partnerships between vendors, systems integrators, and local service providers are increasingly critical to navigate procurement norms, language and cultural nuances, and compliance requirements. Consequently, a geography-aware go-to-market and delivery model remains a decisive element of successful deployments.

Competitive company intelligence summarizing strategic moves, product differentiation, partnerships, and capability gaps among leading vendors and emerging specialists

Competitive company insights emphasize differentiation across technology stacks, go-to-market approaches, and partnership ecosystems. Leading vendors typically articulate clear value propositions around model performance for multimodal detection, integrated observability and logging for forensic analysis, and managed services that reduce operational friction for enterprise buyers. Emerging specialists differentiate through verticalized capabilities such as healthcare-grade privacy controls, defense-oriented provenance and attribution, or retail-focused customer experience protection. Partnerships with cloud providers, systems integrators, and telecom operators have become a significant channel for scaling deployments and addressing regional compliance constraints.

Capability gaps remain in areas such as explainable detection outputs that satisfy auditors, standardized benchmarking for adversarial resilience, and turnkey integration with incident response and legal workflows. Vendors that accelerate remediation workflows by providing contextualized alerts, trustworthy evidence chains, and configurable human-in-the-loop processes gain traction with risk-averse customers. Moreover, companies that offer flexible commercial terms, balancing subscription predictability with usage-based scalability, are better positioned to support both large enterprises and small and medium organizations. Strategic roadmaps that prioritize interoperability, transparent model lineage, and robust support models will determine which companies capture sustained enterprise engagement.

Actionable recommendations for industry leaders to accelerate responsible deployment, strengthen detection pipelines, and operationalize trust and governance across organizations

Industry leaders should adopt a pragmatic, phased approach that aligns technical selection with governance, procurement, and operational readiness. Begin by establishing clear use-case prioritization that links detection objectives to business outcomes such as fraud reduction, brand protection, or regulatory compliance. Proceed with architecture choices that favor modularity: select detection engines and models that can be swapped or supplemented, adopt standardized APIs for integration, and design data flows that preserve provenance and auditability. Simultaneously, operationalize governance by defining clear escalation paths, human review thresholds, and retention policies for evidence and logs.

Leaders must also prepare for policy and macroeconomic volatility by stress-testing vendor contracts for tariff exposure, support continuity, and regional compliance. Invest in staff capabilities by training security, legal, and content teams on threat patterns and model limitations, and develop playbooks that balance automation with human oversight. Finally, pursue vendor partnerships that offer transparent roadmaps, composable pricing options, and strong integration support to accelerate pilots into production. These steps will help organizations scale detection capabilities while preserving control, maintainability, and stakeholder trust.
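As an illustration of the modularity and human-review recommendations above, the following is a minimal sketch rather than a reference design. It assumes each detection engine can be wrapped as a function returning a score between 0 and 1; the names `Detector`, `Decision`, `route`, `review_at`, and `block_at`, the stand-in detectors, and the threshold values are all hypothetical and not drawn from the report.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical interface: any engine that maps content to a score in [0, 1]
# can be registered, swapped, or supplemented without changing the router.
Detector = Callable[[str], float]


@dataclass
class Decision:
    score: float  # mean score across all registered detectors
    action: str   # "allow", "human_review", or "block"


def route(
    text: str,
    detectors: Dict[str, Detector],
    review_at: float = 0.5,  # assumed threshold for escalating to a human
    block_at: float = 0.9,   # assumed threshold for automatic blocking
) -> Decision:
    """Average the detector scores and map the result to an action."""
    if not detectors:
        raise ValueError("at least one detector is required")
    score = sum(d(text) for d in detectors.values()) / len(detectors)
    if score >= block_at:
        action = "block"
    elif score >= review_at:
        action = "human_review"
    else:
        action = "allow"
    return Decision(score=score, action=action)


# Stand-in detectors with fixed scores, used only to exercise the router.
detectors: Dict[str, Detector] = {
    "statistical": lambda text: 0.8,
    "provenance": lambda text: 0.4,
}
decision = route("sample content", detectors)
print(decision.action)
```

Because the router depends only on the `Detector` signature, replacing a vendor engine means changing one dictionary entry, and the two thresholds give governance teams an explicit place to encode review policy.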

Transparent research methodology outlining data sources, qualitative and quantitative approaches, validation techniques, and limitations informing the analysis and conclusions

The research methodology underpinning this summary combines multi-method data collection, expert validation, and iterative synthesis to ensure robust, evidence-based conclusions. Primary inputs included structured interviews with practitioners across industries, technology reviews of representative detection platforms, and in-depth vendor briefings that illuminated product roadmaps and integration patterns. Secondary inputs encompassed peer-reviewed research, public policy documents, and operational guidance from standards-setting organizations to contextualize regulatory and governance trends. Triangulation across these sources enabled the team to cross-validate claims about technology capabilities, deployment preferences, and operational challenges.

Analytical techniques included comparative capability mapping, scenario analysis for policy and tariff impacts, and qualitative segmentation to surface distinct buyer archetypes. Validation steps involved convening independent domain experts to review assumptions about adversarial resilience, model explainability, and supply-chain sensitivity. The methodology also acknowledges limitations: rapidly evolving model capabilities and emergent regulatory initiatives may alter assumptions between research cycles, and vendor roadmaps can shift in response to competitive and policy pressures. Consequently, the findings emphasize directional insights and strategic imperatives rather than precise predictions, and they are intended to inform iterative planning and ongoing market monitoring.

Concluding synthesis that integrates strategic implications, risk considerations, and priority next steps for stakeholders navigating the evolving AI detection environment

In conclusion, AI detection is now a foundational capability for managing the risks and opportunities introduced by synthetic media and automated content generation. The technology landscape presents a diverse set of trade-offs across accuracy, explainability, latency, and cost, and the right combination of hardware, services, and software depends on an organization’s regulatory context, industry-specific needs, and operational maturity. Tariff-driven supply chain shifts in 2025 reinforced the need for architectural modularity and supplier diversification, while regional policy differences continue to shape deployment patterns and vendor selection.

Looking ahead, organizations that prioritize composable architectures, transparent governance, and vendor relationships that emphasize interoperability and evidence-based performance will be best positioned to scale detection capabilities. Operational preparedness, through clear escalation paths, training, and integration with response workflows, will distinguish successful programs from ad hoc implementations. Ultimately, the effective application of AI detection depends as much on disciplined program management and cross-functional coordination as it does on raw model performance, and stakeholders should treat detection programs as ongoing, adaptive investments rather than one-off technology purchases.

Table of Contents

7. Cumulative Impact of Artificial Intelligence 2025

20. China AI Detector Market

Companies Mentioned

- BrandWell

- Caesar Labs, Inc.

- Copyleaks Ltd.

- Crossplag L.L.C

- DupliChecker.com, Inc.

- GPTZero Labs, Inc.

- Grammarly, Inc.

- OpenAI, Inc.

- Originality.AI, Inc.

- PlagiarismCheck.org by Teaching Writing Online Ltd

- PlagScan GmbH

- QuillBot by Learneo, Inc.

- Sapling

- Smodin LLC

- Turnitin, LLC

- Undetectable Inc.

- Unicheck, Inc.

- Winston AI inc.

- ZeroGPT by OLIVE WORKS LLC

Table Information

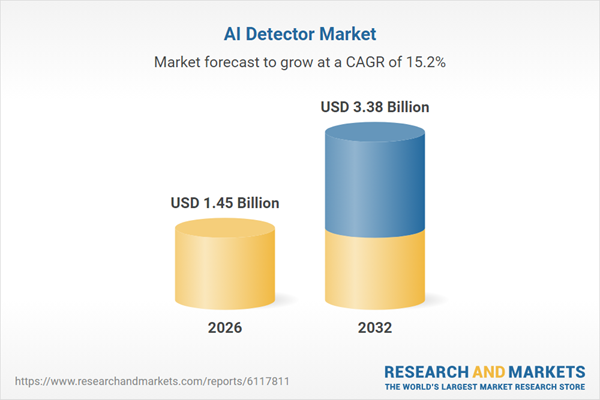

| Report Attribute | Details |
|---|---|
| No. of Pages | 182 |
| Published | January 2026 |
| Forecast Period | 2026 - 2032 |
| Estimated Market Value (USD) | $1.45 Billion |
| Forecasted Market Value (USD) | $3.38 Billion |
| Compound Annual Growth Rate | 15.1% |
| Regions Covered | Global |
| No. of Companies Mentioned | 19 |
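
The growth rate in the table can be sanity-checked against the two market values. The sketch below assumes the estimated value applies to 2026 and the forecasted value to 2032, giving six compounding periods; the table does not state the base and end years explicitly, so that period count is an assumption, and the function name `cagr` is illustrative.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Values from the table, in USD billions; 6 periods assumes 2026 -> 2032.
rate = cagr(1.45, 3.38, 6)
print(f"{rate:.3f}")
```

Under that assumption the computed rate comes out close to the reported 15.1%, which suggests the three figures in the table are mutually consistent.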