AI Inference Market

The AI inference market spans data-center, edge, and on-device compute that turns trained models into real-time decisions across search, content generation, fraud detection, vision, autonomous systems, customer service, and industrial automation. Solutions combine accelerators (GPUs, custom ASICs/NPUs, FPGAs), high-bandwidth memory, low-latency fabrics, model-serving software, observability, and cost controls tuned to latency, throughput, and energy targets. Top applications include enterprise copilots, contact-center assistants, dynamic recommendation, ad ranking, anomaly detection, and perception for robots, vehicles, cameras, and mobile devices.

Trends emphasize smaller, faster, cheaper inference via quantization (INT8/FP8/low-bit), distillation, pruning/sparsity, retrieval-augmented generation, and model routing that chooses the smallest model meeting an SLO. At the platform layer, compilers and runtimes optimize kernels per target silicon; serverless and autoscaling frameworks right-size capacity; and vector databases, caching, and guardrails improve answer quality and unit economics. Differentiation is shifting from peak TOPS to sustained performance under production loads - mixed batch sizes, long-context tokens, memory pressure, and multi-tenant isolation - along with toolchains for safety, governance, and audit.

Competitive dynamics feature hyperscalers, silicon vendors, systems OEMs, inference-as-a-service providers, and open-source stacks aligning around standard model formats and portable APIs. Key challenges remain supply assurance for cutting-edge accelerators, observability across heterogeneous fleets, data privacy, content safety, and achieving predictable cost per request at scale.
As enterprises industrialize AI beyond pilots, procurement prioritizes time-to-value, reliability, security posture, and verifiable business impact, with lifecycle services covering capacity planning, cost tuning, and continuous optimization.

AI Inference Market Key Insights

- Latency and cost are the true KPIs. Winning deployments balance p95/p99 latency SLOs with predictable cost per request using batching, speculative decoding, KV-cache reuse, and token-aware autoscaling across heterogeneous pools.

- Smaller beats bigger (when it can). Distillation, quantization to low-bit formats, and sparsity deliver large efficiency gains; smart routers select compact models for routine tasks and escalate to larger experts only when needed.

- Hardware diversity is permanent. General-purpose GPUs coexist with domain-specific NPUs/ASICs and CPUs with AI extensions; compiler stacks and graph partitioning decide placement to maximize memory bandwidth and utilization.

- Memory is the bottleneck. KV-cache size, HBM capacity, and interconnect bandwidth drive long-context performance; techniques like paged attention, cache offload, and tensor parallelism reduce stalls and cost.

- Edge and on-device surge. Privacy, resiliency, and instantaneous response push inference onto PCs, phones, cameras, and robots; model compression and hardware NPUs enable offline or hybrid execution with cloud fallback.

- RAG professionalizes enterprise AI. Retrieval pipelines with vector stores, document chunking, and freshness policies improve factuality; governance adds redaction, PII handling, and prompt/content safety filters.

- Observability is non-optional. Tracing, token-level analytics, drift/factuality monitors, and safety incident logging turn AI into an operable service with clear runbooks for rollback and model swaps.

- Serverless and multi-tenant isolation mature. Sandboxing, rate shaping, and noisy-neighbor controls enable shared clusters; cold-start minimization and provisioned throughput protect critical paths.

- Open formats unlock portability. ONNX, MLIR, and standard attention kernels ease cross-silicon moves; containerized runtimes and IaC simplify multi-cloud/hybrid deployments and disaster recovery.

- Security and compliance gate deals. Secrets management, signed models, SBOMs, policy-as-code, and moderated output pipelines align inference with enterprise risk, audit, and regional data-sovereignty requirements.
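
The routing idea in the insights above (pick the smallest model that still meets the SLO, escalate only when needed) can be sketched in a few lines. This is a minimal illustration, not a method described in the report: the `ModelOption` fields, the `route` function, and every number in the catalog are hypothetical placeholders chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelOption:
    """One candidate model in a routing catalog (all values are hypothetical)."""
    name: str
    params_billions: float   # rough proxy for model size and serving cost
    p95_latency_ms: float    # measured p95 latency under production load
    quality_score: float     # offline evaluation score in [0, 1]


def route(options: List[ModelOption],
          max_p95_ms: float,
          min_quality: float) -> Optional[ModelOption]:
    """Return the smallest model that satisfies both the latency SLO and the
    quality floor, or None when no model qualifies."""
    eligible = [
        m for m in options
        if m.p95_latency_ms <= max_p95_ms and m.quality_score >= min_quality
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda m: m.params_billions)


# Hypothetical catalog; real figures would come from load tests and evals.
catalog = [
    ModelOption("small", params_billions=3, p95_latency_ms=120, quality_score=0.72),
    ModelOption("medium", params_billions=13, p95_latency_ms=300, quality_score=0.85),
    ModelOption("large", params_billions=70, p95_latency_ms=900, quality_score=0.93),
]

routine = route(catalog, max_p95_ms=1000, min_quality=0.70)
demanding = route(catalog, max_p95_ms=500, min_quality=0.80)
impossible = route(catalog, max_p95_ms=100, min_quality=0.90)
```

With this catalog, the routine request resolves to the small model, the stricter one escalates to the medium model, and the last combination has no eligible model, so the caller would need to relax the SLO or fall back to another path.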

AI Inference Market Regional Analysis

North America

Adoption is led by hyperscale clouds, SaaS platforms, and data-rich enterprises building copilots and personalization engines. Buyers emphasize hybrid architectures, fine-tuned proprietary models, and rigorous observability. Procurement weighs silicon roadmaps, software portability, and support for safety, governance, and audit - often delivered through managed inference services with enterprise SLAs.

Europe

Regulatory focus on data protection, model accountability, and sustainability shapes deployment choices. Enterprises prefer interpretable pipelines, energy-efficient hardware, and on-prem or sovereign-cloud options. Brownfield integration with existing analytics stacks and documented risk controls are must-haves, particularly in financial services, healthcare, public sector, and industrials.

Asia-Pacific

A dense manufacturing and device ecosystem accelerates edge/on-device AI, while large platforms drive consumer-scale inference in commerce, media, and messaging. Cost-optimized accelerators and rapid iteration cycles support SMEs alongside advanced deployments in Japan, Korea, and Australia. Telecoms pilot AI at the network edge for traffic engineering and customer experience.

Middle East & Africa

National digital programs and smart-city initiatives catalyze AI services in government, finance, energy, and airports. Buyers value turnkey platforms with strong security posture, multilingual capabilities, and local-language retrieval. Harsh-environment edge cases (heat, dust, intermittent links) favor ruggedized systems and hybrid cloud patterns.

South & Central America

Adoption is paced by banking, telco, retail, and public services aiming to improve service quality and reduce operating costs. Budget sensitivity encourages managed services, usage-based pricing, and model-size right-sizing. Regional integrators play a key role in data preparation, RAG tuning for local content, operator training, and ongoing cost optimization.

AI Inference Market Segmentation

By Compute

- GPU

- CPU

- FPGA

- NPU

- TPU

- FSD

- Inferentia

- T-Head

- MTIA

- LPU

- Others

By Memory

- DDR

- HBM

By Network

- Network Adapters

- Interconnects

By Deployment

- On-Premises

- Cloud

- Edge

By Application

- Generative AI

- Machine Learning

- Natural Language Processing

- Computer Vision

By End-User

- Consumer

- Cloud Service Providers

- Enterprises

Key Market Players

NVIDIA, AMD, Intel, Qualcomm, Google (TPU), AWS (Inferentia/Trainium), Microsoft (Azure AI Accelerator), Meta (MTIA), Huawei (Ascend), Alibaba T-Head, Baidu (Kunlun), Tenstorrent, Cerebras, Graphcore, SambaNova, Groq, Hailo, Mythic, Biren

AI Inference Market Analytics

The report employs rigorous tools, including Porter’s Five Forces, value chain mapping, and scenario-based modelling, to assess supply-demand dynamics. Cross-sector influences from parent, derived, and substitute markets are evaluated to identify risks and opportunities. Trade and pricing analytics provide an up-to-date view of international flows, including leading exporters, importers, and regional price trends.

Macroeconomic indicators, policy frameworks such as carbon pricing and energy security strategies, and evolving consumer behaviour are considered in forecasting scenarios. Recent deal flows, partnerships, and technology innovations are incorporated to assess their impact on future market performance.

AI Inference Market Competitive Intelligence

The competitive landscape is mapped through proprietary frameworks, profiling leading companies with details on business models, product portfolios, financial performance, and strategic initiatives. Key developments such as mergers & acquisitions, technology collaborations, investment inflows, and regional expansions are analyzed for their competitive impact. The report also identifies emerging players and innovative startups contributing to market disruption.

Regional insights highlight the most promising investment destinations, regulatory landscapes, and evolving partnerships across energy and industrial corridors.

Countries Covered

- North America - AI Inference market data and outlook to 2034

- United States

- Canada

- Mexico

- Europe - AI Inference market data and outlook to 2034

- Germany

- United Kingdom

- France

- Italy

- Spain

- BeNeLux

- Russia

- Sweden

- Asia-Pacific - AI Inference market data and outlook to 2034

- China

- Japan

- India

- South Korea

- Australia

- Indonesia

- Malaysia

- Vietnam

- Middle East and Africa - AI Inference market data and outlook to 2034

- Saudi Arabia

- South Africa

- Iran

- UAE

- Egypt

- South and Central America - AI Inference market data and outlook to 2034

- Brazil

- Argentina

- Chile

- Peru

Research Methodology

This study combines primary inputs from industry experts across the AI Inference value chain with secondary data from associations, government publications, trade databases, and company disclosures. Proprietary modeling techniques, including data triangulation, statistical correlation, and scenario planning, are applied to deliver reliable market sizing and forecasting.

Key Questions Addressed

- What is the current and forecast market size of the AI Inference industry at global, regional, and country levels?

- Which types, applications, and technologies present the highest growth potential?

- How are supply chains adapting to geopolitical and economic shocks?

- What role do policy frameworks, trade flows, and sustainability targets play in shaping demand?

- Who are the leading players, and how are their strategies evolving in the face of global uncertainty?

- Which regional “hotspots” and customer segments will outpace the market, and what go-to-market and partnership models best support entry and expansion?

- Where are the most investable opportunities - across technology roadmaps, sustainability-linked innovation, and M&A - and which segment is the best to invest in over the next 3-5 years?

Your Key Takeaways from the AI Inference Market Report

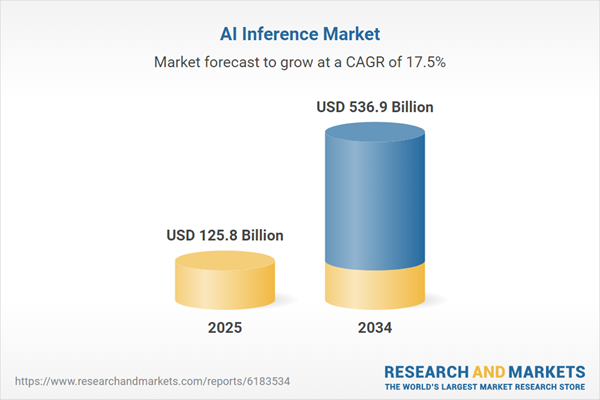

- Global AI Inference market size and growth projections (CAGR), 2024-2034

- Impact of Russia-Ukraine, Israel-Palestine, and Hamas conflicts on AI Inference trade, costs, and supply chains

- AI Inference market size, share, and outlook across 5 regions and 27 countries, 2023-2034

- AI Inference market size, CAGR, and market share of key products, applications, and end-user verticals, 2023-2034

- Short- and long-term AI Inference market trends, drivers, restraints, and opportunities

- Porter’s Five Forces analysis, technological developments, and AI Inference supply chain analysis

- AI Inference trade analysis, AI Inference market price analysis, and AI Inference supply/demand dynamics

- Profiles of 5 leading companies - overview, key strategies, financials, and products

- Latest AI Inference market news and developments

Additional Support

With the purchase of this report, you will receive:

- An updated PDF report and an MS Excel data workbook containing all market tables and figures for easy analysis.

- 7-day post-sale analyst support for clarifications and in-scope supplementary data, ensuring the deliverable aligns precisely with your requirements.

- Complimentary report update to incorporate the latest available data and the impact of recent market developments.

This product will be delivered within 1-3 business days.

Companies Mentioned

- NVIDIA

- AMD

- Intel

- Qualcomm

- Google (TPU)

- AWS (Inferentia/Trainium)

- Microsoft (Azure AI Accelerator)

- Meta (MTIA)

- Huawei (Ascend)

- Alibaba T-Head

- Baidu (Kunlun)

- Tenstorrent

- Cerebras

- Graphcore

- SambaNova

- Groq

- Hailo

- Mythic

- Biren

Table Information

| Report Attribute | Details |
|---|---|
| No. of Pages | 160 |
| Published | November 2025 |
| Forecast Period | 2025 - 2034 |
| Estimated Market Value (USD) | $125.8 Billion |
| Forecasted Market Value (USD) | $536.9 Billion |
| Compound Annual Growth Rate | 17.5% |
| Regions Covered | Global |
| No. of Companies Mentioned | 19 |
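
As a quick consistency check on the table, the stated growth rate can be recomputed from the two market values. The sketch below assumes the estimated value applies to 2025 and the forecasted value to 2034, giving nine compounding periods; the table states the forecast period but does not attach a year to each value, so that mapping is an assumption. The `cagr` helper is illustrative only.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / periods) - 1."""
    return (end_value / start_value) ** (1 / periods) - 1


estimated_usd_bn = 125.8   # estimated market value from the table
forecast_usd_bn = 536.9    # forecasted market value from the table
periods = 2034 - 2025      # assumption: values map to the forecast period endpoints

implied = cagr(estimated_usd_bn, forecast_usd_bn, periods)
print(f"Implied CAGR: {implied:.1%}")  # prints "Implied CAGR: 17.5%"
```

Under that assumption the implied rate agrees with the 17.5% reported in the table.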